cc - week 8 - fscape soundhack and the nn-19

It took me a while to get FScape to affect sounds. I wonder why the Open option in the menu exists? I couldn't get it to work.

I also noticed that a few sounds generated with one of the effects (something to do with Bloss) ended up with some serious DC offset, and Peak doesn't deal with that (ProTools does :).
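For the record, DC offset just means the waveform sits above or below the zero line on average, so the simplest fix is to subtract the average value from every sample. A rough Python sketch of that idea, assuming the audio is already loaded as a list of floats between -1 and 1 (the function name is made up for illustration, and I don't know whether this is what ProTools actually does internally):

```python
def remove_dc_offset(samples):
    """Return a copy of samples shifted so that their mean is zero."""
    if not samples:
        return []
    # The DC offset is the average value of the whole signal.
    offset = sum(samples) / len(samples)
    # Subtracting it re-centres the waveform around zero.
    return [s - offset for s in samples]


# Hypothetical test signal: a square-ish wave sitting 0.4 above zero.
biased = [0.6, 0.2, 0.6, 0.2]
centred = remove_dc_offset(biased)
```

Subtracting the mean only works for an offset that stays constant over the whole file; a drifting offset would need a high-pass filter instead.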

I didn't really understand what SoundHack or FScape were doing. I just twiddled settings until I settled on a result.

They do seem quite interesting, and I look forward to more fun with them.

When editing files, I am used to using 1 and 2 to navigate to the beginning/end of a selection, and I am yet to work out how to do that in Peak. Not being able to jump around makes looping a tad unwieldy.

Got a bit more into the sample settings in the NN-19: root key, key range and level. Last week everything worked straight up; this week's sounds enjoyed it!!
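To get my head around root key and key range, here is a small Python sketch of how a sampler generally uses them. It assumes the usual equal-tempered repitching (one semitone is a factor of the twelfth root of 2) and MIDI note numbers; the function names are made up, and I haven't checked how the NN-19 does it internally:

```python
def playback_ratio(played_note, root_key):
    """Speed factor for playing a sample recorded at root_key on played_note."""
    # Each semitone away from the root multiplies the speed by 2 ** (1 / 12).
    return 2 ** ((played_note - root_key) / 12)


def in_key_range(note, low_key, high_key):
    """True if the note falls inside the sample's key range (inclusive)."""
    return low_key <= note <= high_key


# Hypothetical zone: root key C3 (MIDI 60), mapped from C2 (48) to C4 (72).
octave_up = playback_ratio(72, 60)     # double speed, one octave higher
at_root = playback_ratio(60, 60)       # original speed and pitch
triggers = in_key_range(65, 48, 72)    # F3 sits inside the zone
```

So setting the wrong root key doesn't break anything, it just means every key plays the sample transposed by the difference.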

Apart from that, the sampler was pretty much like last week. I set it up similarly (velocity and mod wheel modulating the filter, a fader modulating the amp env release, a bit of delay and compression), but this time I used a bit of LFO on the filter (BPF again) AND found the pitch modulation!! I did cut the whammy out because it went to about 1.5 minutes, which was too long :( Still can't believe I missed it last week.

Track: cc week 8.mp3

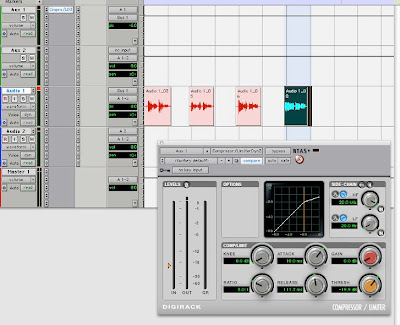

Screen shot.